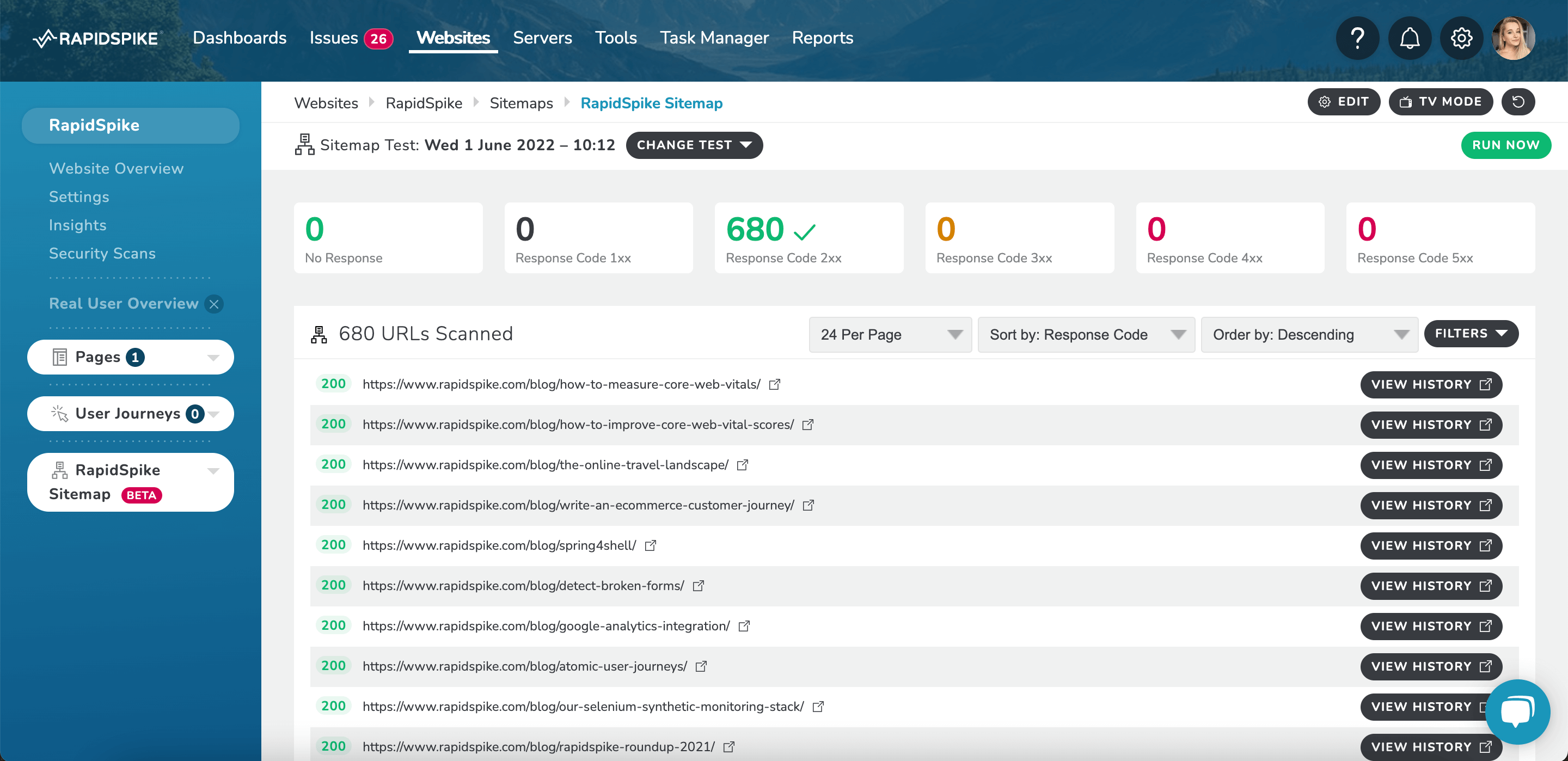

New Feature: Sitemap Monitoring

Our feature development has always been heavily influenced by our users. Some of our most popular features were requested directly by people who use our products every day, and we believe this is one of the best ways to develop a product such as ours: if one person wants to monitor something, chances are others will too. That is how our new feature, Sitemap Monitoring, came about!

If your website is managed via a CMS or similar, chances are it automatically generates an XML sitemap that follows the Sitemap Protocol. Sitemaps are dynamically generated lists of all the pages, images, videos or other content on a website (in effect, a directory of its content), laid out in a standardised format that crawlers and other bots, such as Google’s, can consume efficiently. Sitemaps can even reference other sitemaps: our own website, for example, has multiple sitemaps, which are listed in a ‘sitemap index’.
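For readers curious what the Sitemap Protocol looks like in practice, here is a minimal Python sketch (standard library only) that reads a sitemap and tells a regular `<urlset>` apart from a `<sitemapindex>`. The function name `parse_sitemap` is our own, used for illustration only; the sketch assumes the XML has already been downloaded and ignores optional fields such as `<lastmod>`.

```python
from __future__ import annotations

import xml.etree.ElementTree as ET

# XML namespace defined by the Sitemap Protocol (schema version 0.9).
SITEMAP_NS = {"sm": "http://www.sitemaps.org/schemas/sitemap/0.9"}


def parse_sitemap(xml_text: str) -> tuple[str, list[str]]:
    """Classify a sitemap document and return the URLs it lists.

    Returns ("urlset", page_urls) for a regular sitemap, or
    ("sitemapindex", child_sitemap_urls) for a sitemap index.
    """
    root = ET.fromstring(xml_text)
    # ElementTree reports namespaced tags as "{namespace}localname".
    kind = root.tag.rsplit("}", 1)[-1]
    if kind == "urlset":
        locs = root.findall("sm:url/sm:loc", SITEMAP_NS)
    elif kind == "sitemapindex":
        locs = root.findall("sm:sitemap/sm:loc", SITEMAP_NS)
    else:
        raise ValueError(f"not a sitemap: root element is <{kind}>")
    # <loc> text can carry surrounding whitespace, so strip it.
    return kind, [loc.text.strip() for loc in locs if loc.text]


if __name__ == "__main__":
    index = """<sitemapindex xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">
      <sitemap><loc>https://example.com/sitemap-pages.xml</loc></sitemap>
      <sitemap><loc>https://example.com/sitemap-posts.xml</loc></sitemap>
    </sitemapindex>"""
    print(parse_sitemap(index))
    # ('sitemapindex', ['https://example.com/sitemap-pages.xml', 'https://example.com/sitemap-posts.xml'])
```

A crawler would then fetch each child sitemap listed in the index and parse it the same way.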

Sitemaps are essential for telling search engines which pages and content on your site are important and should be indexed and ranked; that alone makes them worth monitoring and validating. Sitemap Monitoring lets you stay on top of every link your website presents to the world as important.

The configuration options are simple:

- Test from multiple global regions

- Hourly down to weekly test frequencies

We test the URLs listed in your sitemap according to these settings. You can then view and filter the sitemap’s test history, view the history of individual URLs and generate reports.
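As a rough illustration of what testing the URLs in a sitemap can involve, the sketch below requests each URL and records its final HTTP status. It is a simplified, hypothetical example, not our production code: `check_urls`, `http_status` and `UrlResult` are illustrative names, any 2xx or 3xx status is treated as healthy, and it sends `HEAD` requests, which some servers reject (a real checker might fall back to `GET`).

```python
from __future__ import annotations

import urllib.error
import urllib.request
from dataclasses import dataclass
from typing import Callable, Iterable, Optional


@dataclass
class UrlResult:
    url: str
    status: Optional[int]  # None means no HTTP response was received
    ok: bool


def http_status(url: str, timeout: float = 10.0) -> Optional[int]:
    """Send a HEAD request (urlopen follows redirects) and return the final status."""
    request = urllib.request.Request(url, method="HEAD")
    try:
        with urllib.request.urlopen(request, timeout=timeout) as response:
            return response.status
    except urllib.error.HTTPError as err:
        # 4xx/5xx responses are raised as HTTPError but still carry the status code.
        return err.code
    except OSError:
        # URLError (DNS failure, refused connection) and timeouts are OSError subclasses.
        return None


def check_urls(
    urls: Iterable[str],
    fetch: Callable[[str], Optional[int]] = http_status,
) -> list[UrlResult]:
    """Check every URL; `fetch` is injectable so the logic can be tested offline."""
    results = []
    for url in urls:
        status = fetch(url)
        ok = status is not None and 200 <= status < 400
        results.append(UrlResult(url, status, ok))
    return results


if __name__ == "__main__":
    # Offline demo with canned statuses instead of real network requests.
    statuses = {"https://example.com/": 200, "https://example.com/thank-you": 404}
    for result in check_urls(statuses, fetch=statuses.get):
        print(result.url, result.status, result.ok)
```

Keeping the network call behind a `fetch` parameter means the same loop could be run from different regions or on a schedule without changing the checking logic.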

We have built this mechanism to be easily extendable: we can simply add new ‘consumers’ that read from sources other than XML sitemaps. We plan to extend this feature to handle HTML sitemaps, lists of landing pages from Google Analytics and remote JSON files.
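To show why a ‘consumer’ design makes this kind of extension straightforward, here is a hypothetical Python sketch: each source type implements the same small interface, and the monitoring code only talks to that interface. `UrlConsumer`, `XmlSitemapConsumer`, `JsonListConsumer` and `collect_urls` are invented names for illustration, and the JSON consumer assumes the remote file is a plain array of URL strings; real JSON sources may be shaped differently.

```python
from __future__ import annotations

import json
import xml.etree.ElementTree as ET
from typing import Protocol

SITEMAP_NS = {"sm": "http://www.sitemaps.org/schemas/sitemap/0.9"}


class UrlConsumer(Protocol):
    """Hypothetical interface: turns the raw content of a source into URLs."""

    def extract_urls(self, content: str) -> list[str]: ...


class XmlSitemapConsumer:
    """Reads <loc> entries from a Sitemap Protocol <urlset>."""

    def extract_urls(self, content: str) -> list[str]:
        root = ET.fromstring(content)
        return [
            loc.text.strip()
            for loc in root.findall("sm:url/sm:loc", SITEMAP_NS)
            if loc.text
        ]


class JsonListConsumer:
    """Assumes the remote JSON file is an array of URL strings."""

    def extract_urls(self, content: str) -> list[str]:
        data = json.loads(content)
        return [item for item in data if isinstance(item, str)]


def collect_urls(consumer: UrlConsumer, content: str) -> list[str]:
    # The pipeline depends only on the interface, so a new source type
    # means a new consumer class rather than changes here.
    # dict.fromkeys removes duplicate URLs while preserving their order.
    return list(dict.fromkeys(consumer.extract_urls(content)))
```

Under this design, supporting HTML sitemaps would mean writing one more class (say, a hypothetical `HtmlSitemapConsumer`) that pulls links out of the page, without touching `collect_urls`.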

Use Cases

Imagine you have a page that users are taken to after submitting a contact form. The page is only accessible via this route and does not work if visited directly: it shows a 404 error. If this page is in the sitemap and being ranked by Google, visitors may land on it from search results and be shown this error. That isn’t ideal, and the fix is to exclude the page from the sitemap.
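If your sitemap is generated by your own code rather than by a CMS, the fix for this case can be as simple as an exclusion list. Here is a minimal Python sketch, assuming you already have a list of the site paths to publish; `build_sitemap`, `EXCLUDED_PATHS` and the `/contact/thank-you` path are hypothetical.

```python
from __future__ import annotations

import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"

# Hypothetical: pages that only work as part of a flow (e.g. after a contact
# form is submitted) and return 404 when visited directly.
EXCLUDED_PATHS = frozenset({"/contact/thank-you"})


def build_sitemap(
    base_url: str, paths: list[str], excluded: frozenset = EXCLUDED_PATHS
) -> str:
    """Return a Sitemap Protocol <urlset> listing every path except the excluded ones."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for path in paths:
        if path in excluded:
            continue  # flow-only pages should never be offered to search engines
        url = ET.SubElement(urlset, "url")
        ET.SubElement(url, "loc").text = base_url.rstrip("/") + path
    return ET.tostring(urlset, encoding="unicode")


if __name__ == "__main__":
    print(build_sitemap("https://example.com", ["/", "/contact", "/contact/thank-you"]))
```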

Let’s say you’ve just re-categorised the main pages on your website, which has added an extra segment to their URLs: for example, “/about-us” is now “/company/about-us”. If your sitemap still lists the original, uncategorised URL, Google may continue to show it in search results, and visitors may be met with a 404 error. In this case, you would need to know about the problem so that you could update the sitemap with the new, categorised URLs.
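When URLs move like this, one approach is to keep an explicit map of old paths to new ones and apply it to the URLs in the sitemap. Here is a minimal Python sketch, assuming such a mapping exists; `fix_moved_urls` and `MOVED_PATHS` are hypothetical names, and only exact path matches are rewritten (query strings are preserved).

```python
from __future__ import annotations

from urllib.parse import urlsplit, urlunsplit

# Hypothetical mapping produced by the re-categorisation.
MOVED_PATHS = {"/about-us": "/company/about-us"}


def fix_moved_urls(
    urls: list[str], moved: dict[str, str] = MOVED_PATHS
) -> tuple[list[str], list[tuple[str, str]]]:
    """Rewrite URLs whose path has moved; also report each (old, new) change."""
    fixed: list[str] = []
    changes: list[tuple[str, str]] = []
    for url in urls:
        parts = urlsplit(url)
        new_path = moved.get(parts.path)
        if new_path is not None:
            # Swap only the path; scheme, host, query and fragment are kept.
            new_url = urlunsplit(parts._replace(path=new_path))
            changes.append((url, new_url))
            url = new_url
        fixed.append(url)
    return fixed, changes


if __name__ == "__main__":
    fixed, changes = fix_moved_urls(
        ["https://example.com/", "https://example.com/about-us"]
    )
    print(changes)
    # [('https://example.com/about-us', 'https://example.com/company/about-us')]
```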

In both examples, the intention is not to check that the resulting page is online and operational, but rather to validate that the links search engines rank should be ranked at all, and that they are correct.